We all know LLMs are magically powerful at generating text that sounds pretty much like a human expert wrote it, at least as long as you aren’t an expert in that specific domain yourself and therefore won’t notice its errors or inconsistencies. Still, in most situations in life you won’t have direct access to a human expert when the need arises. The Best Available Human standard, proposed by Ethan Mollick in 2023 for evaluating the usefulness of AI, is still a solid way to look at it.

Now it’s 2025, though. The year of AI agents. Neither the valuations of AI labs nor the investments hyperscalers have made in AI infrastructure could be justified by those text response capabilities alone. AI must be able to do things, so that it can eventually cut costs in business processes where human labor is currently the primary expense.

I have a pragmatic and critical approach to GenAI, meaning I rarely believe what the vendors themselves are pitching without seeing further evidence. Yet even I sometimes forget how far we still are from an AI assistant that could reliably do some of the mundane office work for me. In this post I’ll go through a few unexpected failures I recently experienced with M365 Copilot and similar assistants that reminded me of the gap between vision and reality.

There are a lot of similarities between the Metaverse and GenAI when it comes to market expectations. We shouldn’t forget that merely 3 years ago, McKinsey predicted the Metaverse would generate up to $5 trillion in value by 2030. Sure… Then ChatGPT came along, and now GenAI is supposed to be the value driver instead. Whereas the avatars in Metaverse arenas were famous for having no legs, I can’t help thinking that today’s AI assistants mostly have no hands. They have eyes and ears to consume data, yet no way to put it into action without the user.

Let’s look at three things I expected to be able to do with Copilot in 2025 by just prompting my friendly AI assistant:

Turning free-text emails into calendar entries

Comparing two lists of domains

Talking to my phone and learning what’s on my agenda

Either AI failed me, or I’m just a failure when it comes to using modern technology. You be the judge.

“Hey Copilot, create a calendar entry for me”

Every corporation is telling its employees to think AI-first and find ways to be more productive with the great new tools that have been graciously given to them. This is especially true if you work at Microsoft, Amazon, or Google, where the AI infrastructure spend would look particularly bad if not even your own teams could benefit from it.

I work in a company of one, yet I do pay for the $30 per month premium M365 Copilot subscription, so I need to convince myself that it actually reduces the manual steps I have to take. The other day, I tried to use AI to avoid manually creating a calendar entry from a car maintenance reservation email in my inbox.

The experience was… terrible. That, of course, is a great reason to create an illustration of the process:

How to create a calendar event from an email message with M365 Copilot in 10 easy steps!

The first hurdle was the mobile experience. In Outlook for Windows I can already chat about “this email”, but the phone app offers no such way to provide context. And I was sitting comfortably on the couch, trying to be AI-first and increase my productivity. Surely there had to be a way to make this happen with the M365 Copilot app on my Android phone.

It turns out there was, but it took 10 steps.

My first 4 attempts to get Copilot to pick the right message failed. In their usual style, LLMs like Copilot enjoy playing a game of gaslighting, claiming that data which is there isn’t actually there. On the 5th prompt, I found another way for Copilot to retrieve the message I wanted indirectly: instead of asking it for a specific email, I let it be a proper assistant and tell me the latest emails in my inbox.

Next came the step I would have liked to start from: asking my AI assistant to create a calendar event. At first, Copilot seems right on the money and tells me “I’ve created a calendar entry for you”. Except it didn’t.

Nothing had been created on my calendar, as response no. 7 revealed. Copilot had simply produced the answer most pleasing to the user. In step 8 I repeated that I really, truly wanted a real calendar entry. At last, Copilot took this seriously, called all sorts of analysis tools, and responded “here’s an .ICS file you can import to your calendar”.

Right… Well. Okay. iCalendar is a standard format, and it’s nice to see Microsoft defaulting to it. It’s just that, as the provider of the email, the calendar, the AI assistant and everything else, Microsoft could be expected to make the process smoother for the user.

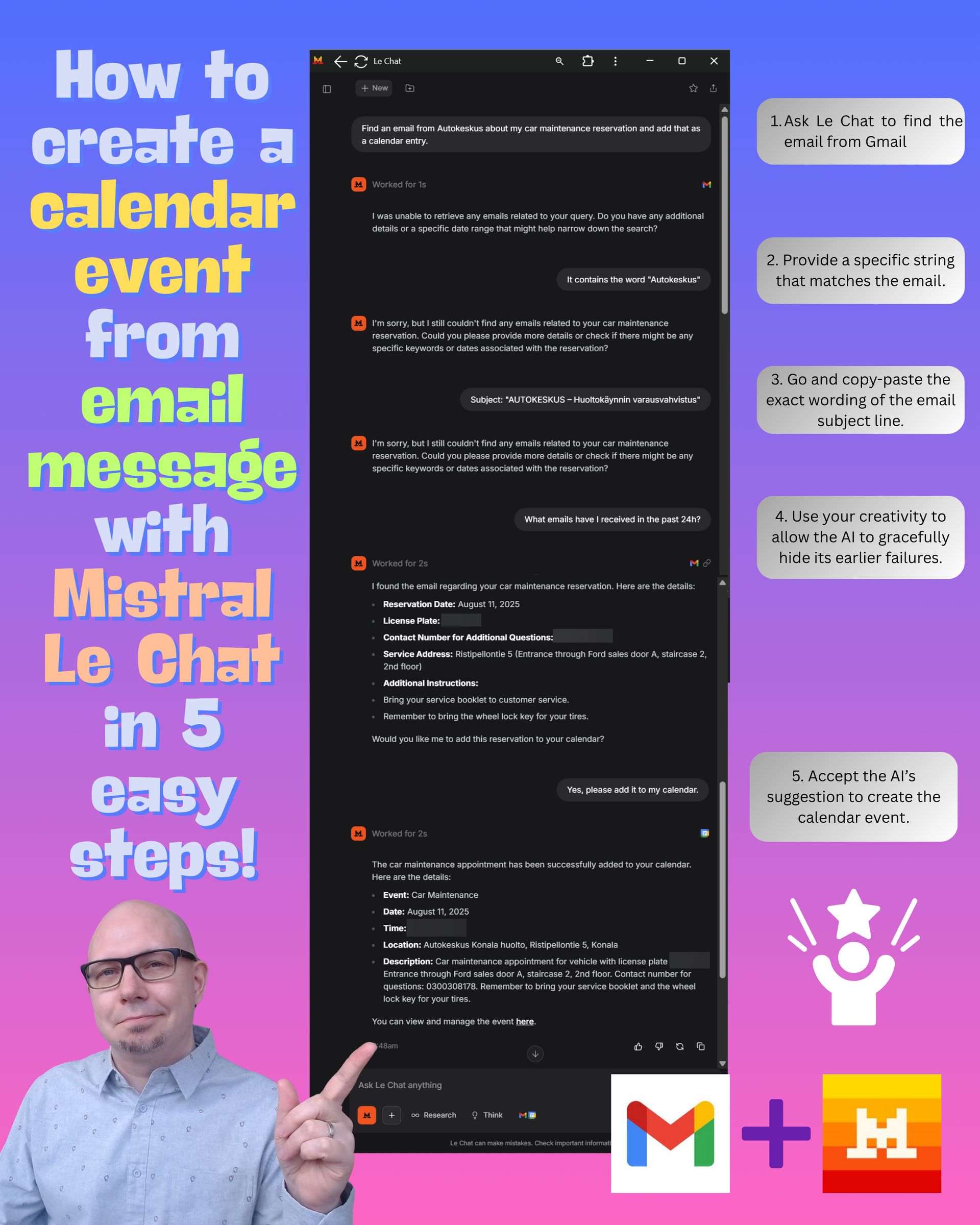
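For reference, an .ics file is just plain text in the iCalendar format (RFC 5545). The Python sketch below builds a minimal single-event file of the kind Copilot handed over. The summary, time, location, and the `PRODID`/`UID` values are illustrative placeholders, not taken from the actual reservation email or from Copilot’s output, and long-line folding from the spec is skipped for brevity.

```python
from datetime import datetime, timedelta, timezone
import uuid

ICS_TIME_FORMAT = "%Y%m%dT%H%M%SZ"  # UTC date-time form used by RFC 5545


def escape_text(value: str) -> str:
    """Escape characters that RFC 5545 TEXT values must not contain unescaped."""
    return (
        value.replace("\\", "\\\\")
        .replace(";", "\\;")
        .replace(",", "\\,")
        .replace("\n", "\\n")
    )


def make_ics(summary: str, start: datetime, duration: timedelta, location: str = "") -> str:
    """Build a minimal single-event iCalendar file; `start` must be timezone-aware."""
    if start.tzinfo is None:
        raise ValueError("start must be timezone-aware")
    start_utc = start.astimezone(timezone.utc)
    end_utc = start_utc + duration
    lines = [
        "BEGIN:VCALENDAR",
        "VERSION:2.0",
        "PRODID:-//Example//ICS Sketch//EN",  # placeholder product identifier
        "BEGIN:VEVENT",
        f"UID:{uuid.uuid4()}@example.invalid",  # placeholder unique event id
        f"DTSTAMP:{datetime.now(timezone.utc).strftime(ICS_TIME_FORMAT)}",
        f"DTSTART:{start_utc.strftime(ICS_TIME_FORMAT)}",
        f"DTEND:{end_utc.strftime(ICS_TIME_FORMAT)}",
        f"SUMMARY:{escape_text(summary)}",
    ]
    if location:
        lines.append(f"LOCATION:{escape_text(location)}")
    lines += ["END:VEVENT", "END:VCALENDAR"]
    # RFC 5545 requires CRLF line endings; folding of lines over 75 octets is omitted.
    return "\r\n".join(lines) + "\r\n"


if __name__ == "__main__":
    # Illustrative values only.
    print(make_ics(
        "Car maintenance: oil change",
        datetime(2025, 6, 3, 9, 0, tzinfo=timezone.utc),
        timedelta(hours=1),
        "Example Service Center",
    ))
```

Saving that output as a `.ics` file gives something most calendar apps can import, which is roughly the manual step Copilot left to the user.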

For comparison, I wanted to test a different kind of AI vendor: the European option, Le Chat from Mistral. Its AI chatbot offers connectors to Gmail and Google Calendar at no cost. Here was the same process using Le Chat and Gmail:

How to create a calendar event from an email message with Mistral Le Chat in 5 easy steps!

At first, it went just as badly as with Copilot. Whether I gave it the topic, a specific keyword, or the exact subject line, nothing helped Le Chat locate the right email message in Gmail. Luckily, the exact same roundabout way of querying the emails worked here, too: “What emails have I received in the past 24h?”

Now, Le Chat found the right email immediately (and skipped showing me any irrelevant ones). “Would you like me to add this reservation to your calendar?” Yes, please. “Okay, done!”

Wow! It actually worked! In 5 steps, there was a real event in Google Calendar with the right data, just as it should have worked within the Microsoft ecosystem, too. Even though 5 steps is a lot fewer than 10, the biggest difference was that the AI did not pretend it had completed the work, which could have had a damaging outcome for the user (in my case, missing my oil change reservation and having to reschedule or pay a fee).

I had to test this further in the Windows Outlook client, where the full M365 Copilot Chat is now available, using another fake email to make sure no memory or cache was interfering with the results. Sure enough, Copilot’s default response to the “create a calendar entry” prompt is always a blatant lie:

M365 Copilot Chat in Outlook telling me it created a calendar entry (no, it didn’t).

Technically, it’s no surprise that LLMs hallucinate things that aren’t real. Commercially, it feels like a massive failure on Microsoft’s part. They haven’t managed to develop and implement guardrails to protect users in what I consider the most obvious scenario imaginable for a personal AI assistant like Copilot.

I see discussions about similar issues on LinkedIn and occasionally participate in them. The common theme seems to be that tech experts from Microsoft and its partners have valid explanations for why these issues happen. And I totally get that there are limitations, such as how much data the Graph API can return to the agent. But every time, I can’t help asking: if people know about these technical limits, why can’t that knowledge be built into Copilot itself? Why couldn’t it point out the tasks it isn’t capable of handling?

Obviously, there are ways to block harmful content from being generated by LLMs in the cloud. Is it technically impossible to also stop Copilot from telling the user it did something that is beyond its capabilities? I bet the reasons are more on the commercial side: no legal liability currently exists for such outputs, whereas responses containing hate speech and the like would land the vendors in hot water.
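To make the question concrete, here is a minimal sketch of the kind of check a vendor could run before showing a reply, assuming the assistant’s tool calls are logged as structured results. The `ToolResult` type, the `create_event` tool name, and the phrase list are all hypothetical; they are not part of any Copilot or Microsoft Graph API, and simple phrase matching is a deliberately crude stand-in for a real claim classifier.

```python
from dataclasses import dataclass
from typing import List, Optional

# Hypothetical phrases signalling that the assistant claims a finished action.
COMPLETION_CLAIMS = ("i've created", "i have created", "has been created", "i've added", "i have added")


@dataclass
class ToolResult:
    """Hypothetical record of one tool call the assistant made during a turn."""
    tool_name: str
    succeeded: bool
    created_id: Optional[str] = None  # e.g. the id of a newly created calendar event


def event_was_created(results: List[ToolResult]) -> bool:
    """True only if a (hypothetical) create_event call succeeded and returned an id."""
    return any(r.tool_name == "create_event" and r.succeeded and r.created_id for r in results)


def guard_completion_claim(draft_reply: str, results: List[ToolResult]) -> str:
    """Replace a draft reply that claims success the tool log cannot back up."""
    # Normalize curly apostrophes so "I’ve" and "I've" match the same phrase.
    text = draft_reply.lower().replace("\u2019", "'")
    claims_done = any(phrase in text for phrase in COMPLETION_CLAIMS)
    if claims_done and not event_was_created(results):
        return ("I wasn't able to create the calendar entry. "
                "I can give you the event details or an .ics file to import instead.")
    return draft_reply
```

The design choice is to trust the tool log over the model’s wording: a success message is only allowed through when a matching action actually returned evidence of completion.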

“Hey Copilot, process this text data for me”

Let’s step back from the world of agents that perform work across systems and look at a use case that requires nothing but a chat response. Since recent AI models are promoted as useful for all kinds of deep research work and data crunching, I wanted to use AI to complete a task involving two lists of domains.
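The exact task isn’t spelled out here, but assuming “comparing” means finding which domains appear in only one list or in both, the deterministic baseline is a few lines of set arithmetic. In this sketch the function names and the normalization rules (trimming whitespace, lowercasing, dropping a trailing root dot) are assumptions chosen for illustration.

```python
from typing import Dict, Iterable, List


def normalize_domain(domain: str) -> str:
    """Trim and lowercase a domain; drop a trailing root dot (e.g. 'example.com.')."""
    return domain.strip().lower().rstrip(".")


def compare_domain_lists(a: Iterable[str], b: Iterable[str]) -> Dict[str, List[str]]:
    """Return the domains found only in a, only in b, and in both (sorted, deduplicated)."""
    set_a = {normalize_domain(d) for d in a if d.strip()}
    set_b = {normalize_domain(d) for d in b if d.strip()}
    return {
        "only_in_a": sorted(set_a - set_b),
        "only_in_b": sorted(set_b - set_a),
        "in_both": sorted(set_a & set_b),
    }


if __name__ == "__main__":
    print(compare_domain_lists([" Example.com", "foo.org."], ["example.com", "bar.net"]))
```

Because the result is computed rather than generated, it can be checked line by line, which is a useful yardstick when judging what a chat assistant returns for the same two lists.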
