Before the public cloud and SaaS were mainstream, I was a CRM business specialist who needed the IT department to provide a set of Windows servers to run the new CRM software I was in charge of deploying to the organization. When I saw how Microsoft’s CRM Online (now Dynamics 365) made it possible to skip this and just provision a trial account for the service, I was sold.

Before that job, I was the marketing guy who also got assigned the task of managing all the IT systems of a small org. I inherited a set of Lotus Domino servers literally running in the cleaning closet. When I finally completed the project of moving the Notes-based business software to Exchange, SharePoint and Dynamics CRM, running on servers that sat in a rack managed by a hosting company rather than in the closet, I was relieved.

These early-career experiences made it seem like the further away I could get from the servers, the more value I could get out of them. Focus on the business processes, and design business apps that support and automate them. Empower every user to become a citizen developer and build their own apps. Access data and tools from any device, anywhere. All made possible by the cloud.

Lately, I’ve come to see that the cloud isn’t all you need. There is value in having control over the computers that store your data and run your tools. And working with command line tools instead of pretty web portals isn’t scary, because LLMs have our back.

You can just script things

When I got my first PC, there was no graphical UI. There also wasn’t an internet where I could search for answers on what to do when faced with the C:\> prompt of the MS-DOS command line. Books, magazines, and later BBS forums were filled with helpful information, though, so I learned to get around: tweaking the settings so there’d be enough memory for my PC games and so the sound card would work for my Fast Tracker studio.

I’ve never enjoyed learning any specific syntax by heart, which must be one reason I never bothered to learn how to create my own programs. Writing code was for The Other People. I was motivated by figuring out what all these awesome tools programmed by others could do when put into use. I need to see things in action, or at the very least see a UI that presents the possible actions to me. Knowing which commands to punch into the black terminal window offered none of that.

The flipside of this is that I’m also very picky about UX. When an app gets in my way, forces me to jump through unnecessary hoops, or refuses to show me the information it obviously should, you’ll hear me shouting the F-word very loudly. (Luckily my private office is pretty well isolated from the neighbors.) As a result, I often spend considerable amounts of time in “there’s gotta be a smarter way” mode, researching less stupid ways to achieve my goals.

AI chatbots are great at looking up very specific answers online to meet the requirements expressed in the prompt. One thing they do that search engines don’t is offer to write a script for completing the task. To a large language model, a set of written commands is the most natural way to express a solution, given the wealth of command line syntax and API documentation in its training data.

This makes tools like ChatGPT and Claude very handy partners that compensate for my inability to memorize specific strings. Sure, they do hallucinate things, but the errors usually reveal themselves as soon as you try to execute the scripts. What you can do with just a PowerShell script, rather than juggling dedicated apps and processing files manually, is incredible. And if the output or the process needs adjusting to get what you really needed, just run the thing again.

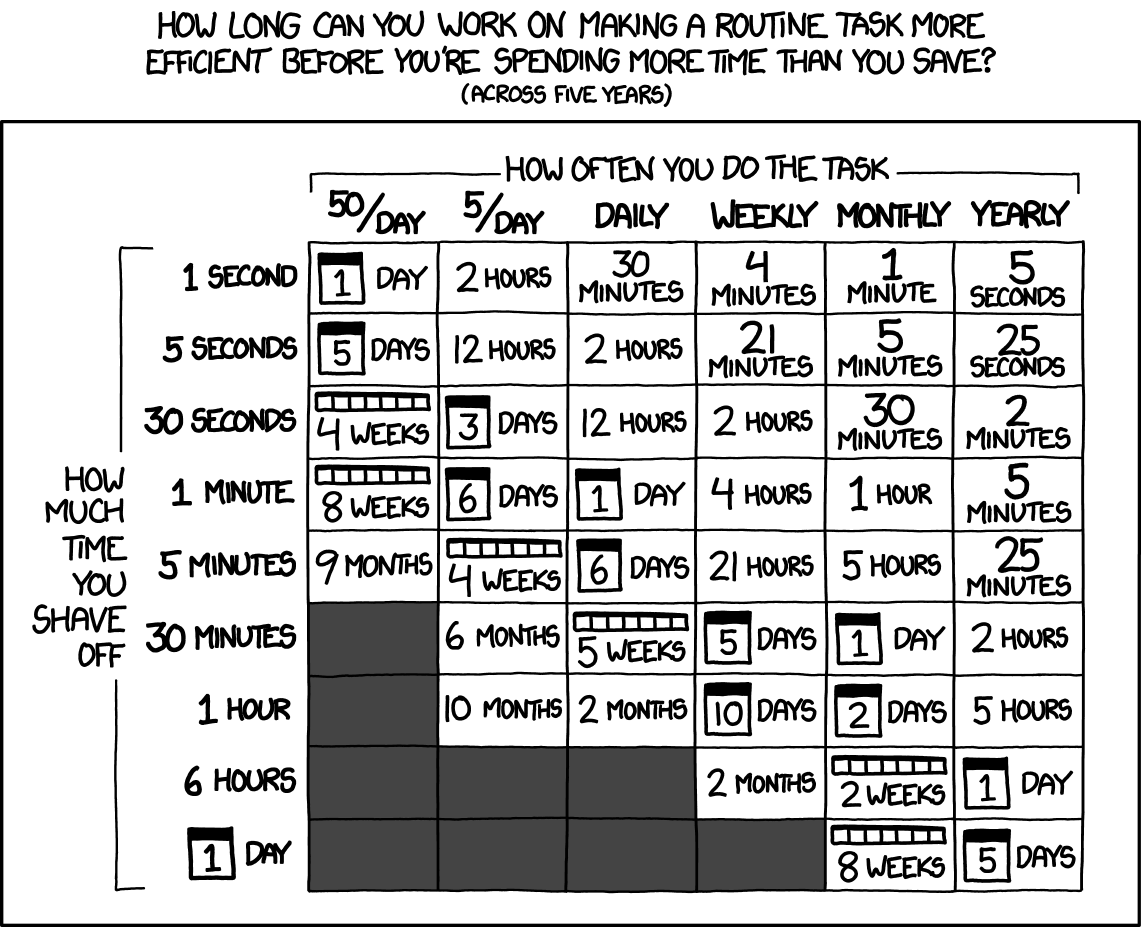

The XKCD chart above asks: “How long can you work on making a routine task more efficient before you're spending more time than you save? (across five years)”. It almost makes me dizzy to think how much the practical equation has changed now that LLMs can write scripts for us. Note: I’m not talking about using non-deterministic GenAI for the task itself. It’s about using GenAI to create a deterministic tool.

The comic’s original math over a 5-year span is outdated. It was a sensible threshold for illustrating the original point: computer geeks often end up spending far more time building the automation than they could ever reap in benefits. That was an era when it was hard to get much done in less than 5 minutes unless you were a true expert in the domain.

Today, the tools you can prompt into existence in just 5 minutes can do a lot. For a monthly routine task, you’d only need to save 5 seconds per run to break even on the time spent over five years. Anything that takes a minute of your time every month would be worth an hour spent with an LLM and a terminal window. The key takeaway: even little things are worth a shot now. If it doesn’t work after a few minutes, just leave things as they are; you’ve lost barely anything on the experiment (and you learned something about practical AI usage).
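The arithmetic behind these numbers fits in a few lines of Python. This is a minimal illustration that assumes the comic’s five-year horizon and assumes the script fully replaces the manual work; the function names are my own.

```python
# Break-even math for the XKCD "how long can you spend automating" trade-off,
# assuming a five-year horizon and that automation removes the manual step entirely.

HORIZON_YEARS = 5


def runs_over_horizon(runs_per_year: int, years: int = HORIZON_YEARS) -> int:
    """How many times the routine task is performed over the horizon."""
    return runs_per_year * years


def break_even_seconds(seconds_saved_per_run: float, runs_per_year: int,
                       years: int = HORIZON_YEARS) -> float:
    """Maximum time (seconds) you can spend building the tool before losing time overall."""
    return seconds_saved_per_run * runs_over_horizon(runs_per_year, years)


if __name__ == "__main__":
    monthly = 12
    # 5 s saved x 60 monthly runs = 300 s, i.e. 5 minutes of prompting
    print(break_even_seconds(5, monthly) / 60)     # 5.0 (minutes)
    # 60 s saved x 60 monthly runs = 3600 s, i.e. 1 hour
    print(break_even_seconds(60, monthly) / 3600)  # 1.0 (hours)
```

Swap in a weekly (52) or daily (365) frequency and the break-even budget grows accordingly.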

Getting the real benefits out of all this still requires some intimacy with raw scripts and dark terminals. Traditionally, it would have been hard to expect users who’ve spent their computing days inside apps to suddenly start working from the command line. The rise of tools like Claude Code, and their popularity beyond professional coders, now seems to be turning the tide at least a bit toward the dark side of blinking cursors. I wrote more about the power of the terminal UI (TUI) in my previous newsletter issue for the Plus subscribers.

Your computer, trapped inside an OS

The wonderful promise of the cloud is that the computer is someone else’s problem to manage. Things may not always work the way you wish in SaaS apps, yet when something breaks down, you can just spend your time elsewhere while someone up in the cloud control tower fixes it.

When you build the tools locally and take ownership of the scripts and code, there’s no one else there to fix them. Unfortunately, this doesn’t mean that no one else can break your tools. That’s been happening far too often lately with Windows updates. Microsoft can’t seem to get over the quality issues that plague Windows 11 features and hotfixes.

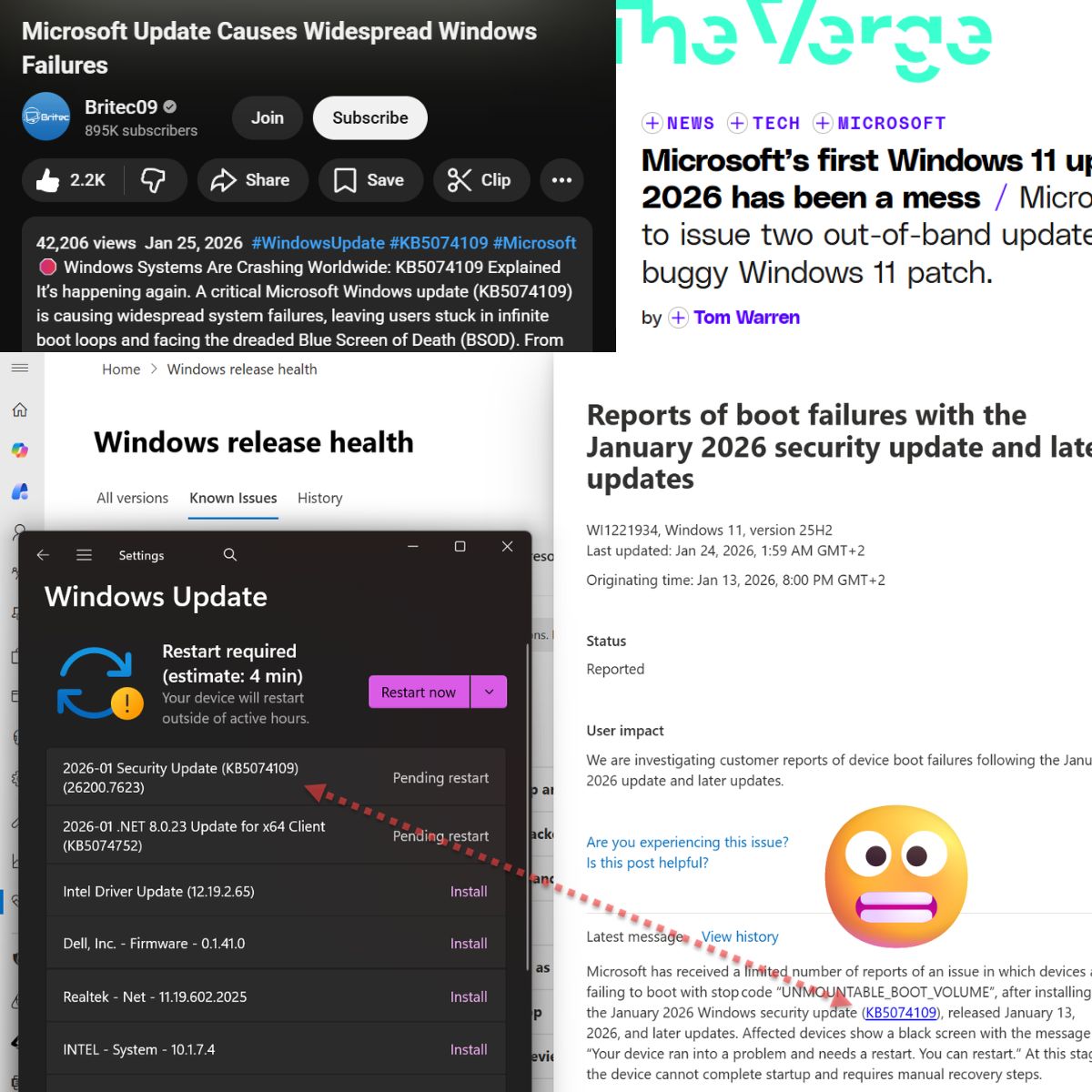

Another week, another risk of your computer not being able to boot to Windows because someone at Microsoft forgot to test things properly:

Windows Update asking me to finalize the notorious KB5074109 update with a reboot.

This isn’t a fringe issue with some exotic configuration. This video demonstrates how a brand new Windows 11 VM, provisioned solely for testing purposes, gets stuck on a bad update that can’t be resolved any other way than by resetting the PC. Friends of Linux are naturally sharing such horror stories as proof that they’ve made the right choice of OS for their own devices:

“In fairness, with Linux I have to do my own patching to make my computer unable to boot. That has been a lot of effort for me in the past, but I have occasionally pulled it off. MS is providing this as a service.”

What can we do when a cloud service out there pushes yet another potentially destructive Windows update onto our PCs? Three weeks ago I faced a BSOD loop on my main work PC that nothing short of a full wipe of the C: drive and a fresh Windows 11 installation could resolve. I’m painfully aware of how much installation and configuration work is required after the OS finally boots up: I lost at least two full working days restoring my tools to how they were.

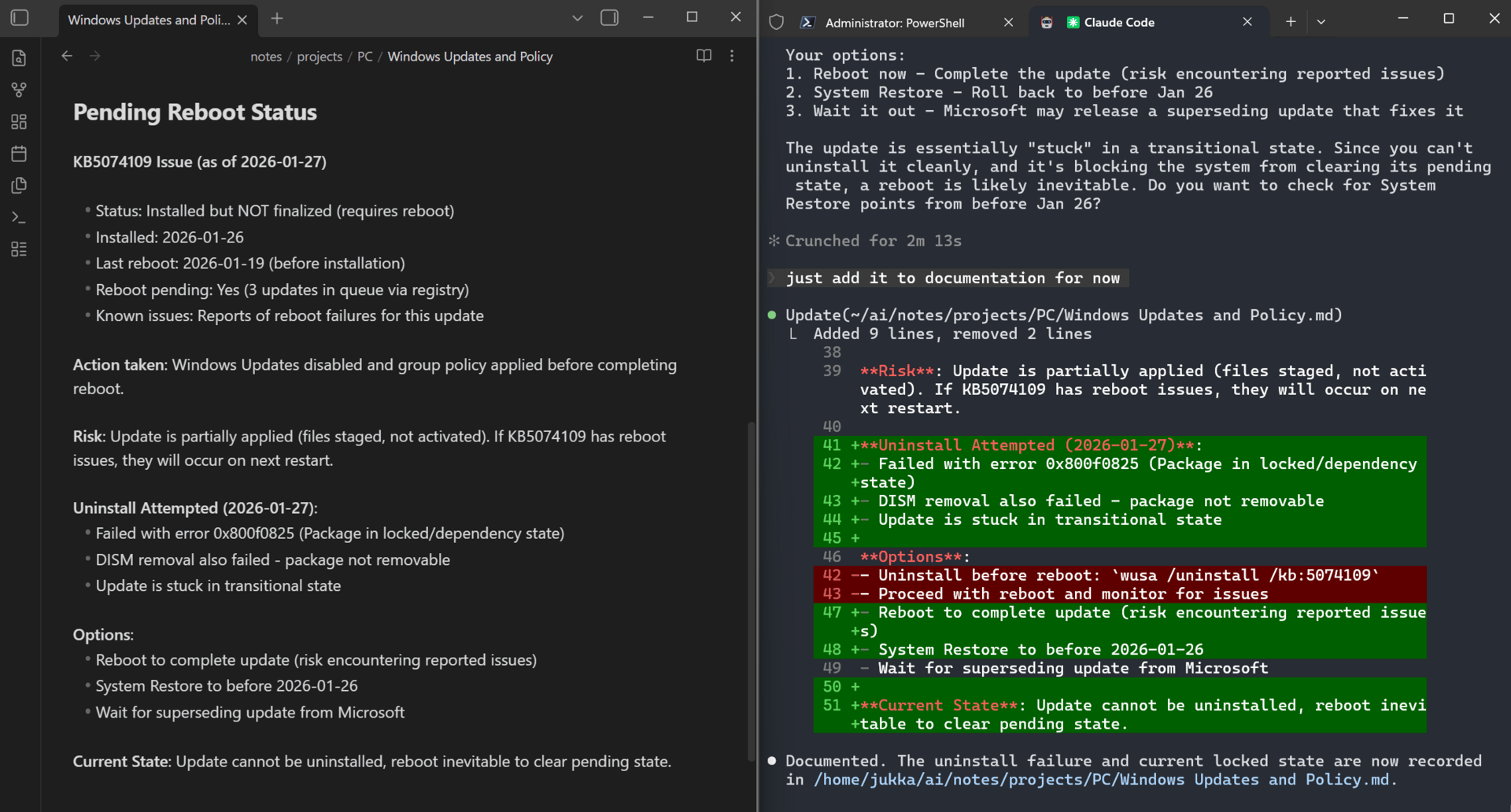

I have naturally asked AI for help with this conundrum. While I have no way to guarantee that a full wipe of the system drive won’t be required again in the future, I can at least take more control over my PC via the command line. For example, getting exact data about the update status with Claude Code CLI is a breeze. It can suggest and try ways to undo the damage of surprise updates, even if in this case nothing seems to protect my PC from KB5074109, which has already been installed and will be applied at the next reboot.
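As an illustration of the kind of check an agent like Claude Code might run, here is a minimal Python sketch. It assumes the installed hotfixes were first exported on the Windows machine with PowerShell (`Get-HotFix | Select-Object HotFixID, Description | ConvertTo-Json | Out-File -FilePath hotfixes.json -Encoding utf8`); the file name and helper functions are hypothetical. `Get-HotFix` reads the `Win32_QuickFixEngineering` WMI class, which does not cover every kind of update, so a negative result is a hint rather than proof.

```python
import json
from pathlib import Path


def load_hotfix_ids(raw_json: str) -> set[str]:
    """Return the HotFixID values from ConvertTo-Json output, upper-cased.

    ConvertTo-Json emits a single object instead of an array when the
    pipeline holds exactly one item, so both shapes are handled here.
    """
    data = json.loads(raw_json) if raw_json.strip() else []
    if isinstance(data, dict):
        data = [data]
    return {str(item["HotFixID"]).upper() for item in data if item.get("HotFixID")}


def is_installed(kb_id: str, raw_json: str) -> bool:
    """Case-insensitive check for a KB identifier such as 'KB5074109'."""
    return kb_id.upper() in load_hotfix_ids(raw_json)


if __name__ == "__main__":
    # Hypothetical export file; "utf-8-sig" also accepts the BOM that
    # Windows PowerShell 5.1 writes with -Encoding utf8.
    path = Path("hotfixes.json")
    if path.exists():
        raw = path.read_text(encoding="utf-8-sig")
        print("KB5074109 installed:", is_installed("KB5074109", raw))
```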

Obsidian notebook with my PC’s update log and installation status, as documented by Claude.

These days, getting all the apps installed on a brand new Windows machine (or a wiped one) is a breeze with WinGet. I’m sure some IT nerds have been using it for ages already, yet an LLM makes it approachable for everyone. Claude will gladly catalog and group the apps you use into a script file that can be run whenever you need to start from scratch. To keep it up to date, all you need to do is ask the AI in plain English. Or, again, build it all into an automated process if you expect to need it often.
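A minimal sketch of such a catalog, written in Python for illustration: it takes apps grouped by purpose and prints a PowerShell script of `winget install` commands that you can save as a `.ps1` file. The groups and package IDs below are examples, not my actual setup, so verify each ID with `winget search` first. For the basic case, WinGet’s own `winget export` and `winget import` commands also exist.

```python
# Illustrative catalog: group name -> WinGet package IDs (verify with `winget search`).
APP_GROUPS: dict[str, list[str]] = {
    "Notes and writing": ["Obsidian.Obsidian"],
    "Development": ["Git.Git", "Microsoft.VisualStudioCode"],
    "Utilities": ["Microsoft.PowerToys"],
}


def build_install_script(groups: dict[str, list[str]]) -> str:
    """Render a PowerShell script with one `winget install` line per package."""
    lines = ["# Generated app restore script"]
    for group, package_ids in groups.items():
        lines.append("")
        lines.append(f"# --- {group} ---")
        for package_id in package_ids:
            # --exact matches the ID precisely; the accept flags skip interactive prompts.
            lines.append(
                f"winget install --id {package_id} --exact "
                "--accept-package-agreements --accept-source-agreements"
            )
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(build_install_script(APP_GROUPS))
```

Keeping the catalog as data rather than a hand-edited script means a plain-English request to add or move an app becomes a one-line change.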

CLI tools are powerful because they have access to your computer. At the same time, they are vulnerable to whatever happens to your computer: while tools like Claude Code or Codex run model inference in the cloud, you still need something running and configured locally.

With great power comes great vulnerability

The most extreme example of combining local tools with cloud LLMs is Moltbot, formerly known as ClawdBot before Anthropic’s lawyers got in touch with the developer. There are certainly other YOLO-style research preview features out there, even from the big vendors. It’s just that Cla… sorry, Moltbot managed to go viral all on its own. It captured the attention of not only the AI cheerleaders but also techies who had previously been disappointed by what “agentic” AI products from big vendors were delivering.

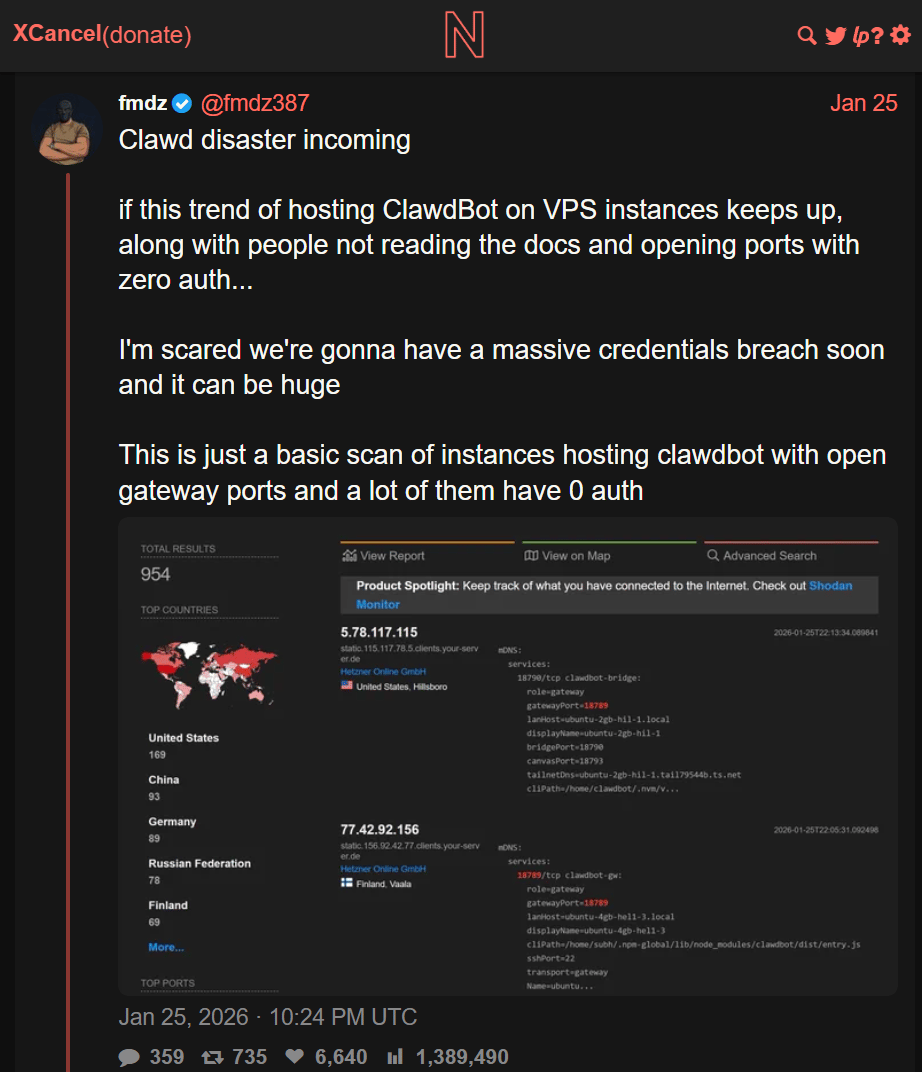

Because vibe scripting your own virtual server is pretty simple these days, Moltbot deployments started to appear on the cybersecurity radar, too, thanks to how carelessly the servers had been configured:

“Many people are running ClawdBot on their own virtual servers and exposing it directly to the internet. They are opening network ports without any authentication: No password, no token, no access control. Anyone who finds those servers can connect to them.

Tools like Shodan make this trivial. You can scan the internet and instantly see hundreds of ClawdBot instances that are publicly reachable. Some of them potentially contain API keys, credentials, logs, or internal data.”
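The basic countermeasure to the exposure described in the quote is generic rather than Moltbot-specific: listen only on the loopback interface and require a secret on every request. Here is a minimal Python sketch of that pattern using only the standard library; it is not Moltbot’s actual configuration, and the `AGENT_TOKEN` environment variable and port 8765 are hypothetical.

```python
import hmac
import os
import secrets
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer
from typing import Optional

# Hypothetical token source: use AGENT_TOKEN if set, else generate a random one.
TOKEN = os.environ.get("AGENT_TOKEN") or secrets.token_urlsafe(32)


def is_authorized(auth_header: Optional[str], token: str) -> bool:
    """Check an 'Authorization: Bearer <token>' header in constant time."""
    if not auth_header or not auth_header.startswith("Bearer "):
        return False
    supplied = auth_header[len("Bearer "):]
    return hmac.compare_digest(supplied.encode(), token.encode())


class AgentHandler(BaseHTTPRequestHandler):
    def do_GET(self) -> None:
        # Reject anything without the shared secret: no token, no access.
        if not is_authorized(self.headers.get("Authorization"), TOKEN):
            self.send_response(401)
            self.send_header("WWW-Authenticate", "Bearer")
            self.end_headers()
            return
        self.send_response(200)
        self.send_header("Content-Type", "text/plain")
        self.end_headers()
        self.wfile.write(b"ok\n")


def make_server(port: int = 8765) -> ThreadingHTTPServer:
    # 127.0.0.1 = loopback only; binding to 0.0.0.0 would expose the port on every interface.
    return ThreadingHTTPServer(("127.0.0.1", port), AgentHandler)
```

Start it with `make_server().serve_forever()` and call it with `curl -H "Authorization: Bearer <token>" http://127.0.0.1:8765/`.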

There has been no breakthrough yet in tackling the fundamental insecurity of naive LLMs that follow orders from anyone. So, even if the ports of personal AI servers weren’t left wide open, the problem remains: if you let an AI with access to tools and your secrets read incoming email from the outside world, there’s nothing stopping malicious actors from giving orders to your server.

Agent 365: a computer for AI workers

Microsoft could offer a useful service to address this very scenario. It’s the reason why I still have hopes for Agent 365 becoming a viable product, despite the numerous delays and false marketing claims surrounding the launch of A365 agents. Just because it’s not easy to make it work doesn’t mean Microsoft can’t eventually succeed.
